Book Review - Data Feminism

Introduction

Data Feminism is written by Catherine D’Ignazio and Lauren Klein.

If I had been asked what a feminist is before reading this book, I’d probably have said something similar to Merriam-Webster’s (and apparently Beyoncé’s) definition: “a person who believes in the political, social, and economic equality of the sexes.”

After reading Data Feminism… well, the definition above still works, but apparently we also need to include being antiracist, having keen data literacy skills, and committing to continuous improvement. In the book, feminism is treated like a scientific discipline, and that’s not a bad thing. The scientific method strives for continuous learning and the rejection of ideas we can prove false.

At first I didn’t understand why a book on feminism would focus so much on racial issues and injustices, but after reading it I understand that “a rising tide lifts all boats.” Advocating for one group can help us all: uncovering and countering biases or injustices in one area of the world teaches us how to notice and counter them in others.

Just as “science” is a continual process, feminism might be an ideal we can aspire to but never fully reach, because I don’t think we will ever live in a world entirely free of injustice.

Before reading the book, I read a few reviews on Goodreads to get a feel for what I was getting into. One reader complained that many of the best practices in this book are just that: standard best practices in science. At times I struggled with the same thought. Is understanding where your data comes from a feminist practice or just a good one? “Doing unto others” doesn’t make someone a Christian, but someone who doesn’t do it shouldn’t call themselves one. I don’t think we can expect everyone who believes in feminism to be data-literate scientists who know the provenance of their data intimately, and, despite the content of this book, I don’t think the authors do either.

Since I didn’t know much about intersectionality before reading this book, I found the initial chapters a little hard to read, simply because of the language associated with this school of thought. The authors were kind enough to define many of the terms, and I did my best to follow everything, but I’m still unsure why the word “bodies” is used instead of “people” so often.

Once I made my way through the more esoteric second chapter, I was rewarded with a thoughtful and technical discussion of not just women’s issues but human issues.

Rather than doing a chapter-by-chapter analysis, I’ve listed some of my favorite points from the book below:

Garbage in, garbage out

Data Feminism was written in 2020, and we’re living in a new world now. The now-famous story of Target detecting a young woman’s pregnancy before her parents knew almost seems quaint in 2024. Since then, AI has gotten a lot creepier, and things are accelerating.

When I call customer service lines, I sometimes speak to scary-good AI, and many companies now use asynchronous interviews screened by AI to pass or fail candidates.

Anyway, as the saying goes: garbage in, garbage out, or, more pertinently, bias in, bias out.

I think (or at least I hope) that in our current world order most companies and governments aren’t explicitly trying to discriminate with their data products.

In the power chapter, the authors discuss how algorithms that don’t consider race can still discriminate by using variables that, unfortunately, do correlate with race, such as having friends or family who have been arrested or growing up in a single-parent household (both of which, incidentally, apply to me).
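To convince myself of how this works, here’s a toy Python sketch. Everything in it is hypothetical: the two groups, the “family member arrested” feature, and the counts are made up for illustration and aren’t taken from the book. The scoring rule never looks at group membership, yet it flags one group four times as often, simply because the proxy feature is unevenly distributed between the groups.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    group: str           # protected attribute; never passed to the scoring rule
    family_arrest: bool  # hypothetical proxy feature that correlates with group


def risk_flag(a: Applicant) -> bool:
    # A "race-blind" rule: it only looks at the proxy feature.
    return a.family_arrest


def build_population() -> list[Applicant]:
    # Hypothetical counts: 100 people per group. The proxy is far more common in
    # group B (e.g. because of heavier policing), not because of individual behavior.
    pop: list[Applicant] = []
    for group, n_with_proxy in (("A", 10), ("B", 40)):
        pop += [Applicant(group, True) for _ in range(n_with_proxy)]
        pop += [Applicant(group, False) for _ in range(100 - n_with_proxy)]
    return pop


def flag_rate_by_group(pop: list[Applicant]) -> dict[str, float]:
    # Share of each group that the rule flags as "high risk".
    rates: dict[str, float] = {}
    for g in sorted({a.group for a in pop}):
        members = [a for a in pop if a.group == g]
        rates[g] = sum(risk_flag(a) for a in members) / len(members)
    return rates


if __name__ == "__main__":
    print(flag_rate_by_group(build_population()))  # {'A': 0.1, 'B': 0.4}
```

Dropping the protected attribute from the inputs doesn’t remove the bias; it just hides where it comes from.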

It’s understandable that a company would want to maximize profits for shareholders, but even from a purely financial standpoint, corporations have been widely criticized for irresponsibly sacrificing long-term sustainable growth for short-term profitability. Executive bonus structures are partly to blame (I can see the Vox article now: “Are Bonuses Racist?”).

An algorithm that minimizes risk also intrinsically limits reward.

I’m reminded of a former employer of mine that recently faced accusations of racial discrimination in its lending practices (note that I don’t know how they currently arrive at lending decisions, though I did work in the loan department).

Absence of data does not imply absence of an issue

This may seem like common sense, but good ideas often do. I find myself saying “why didn’t I think of that?” all the time.

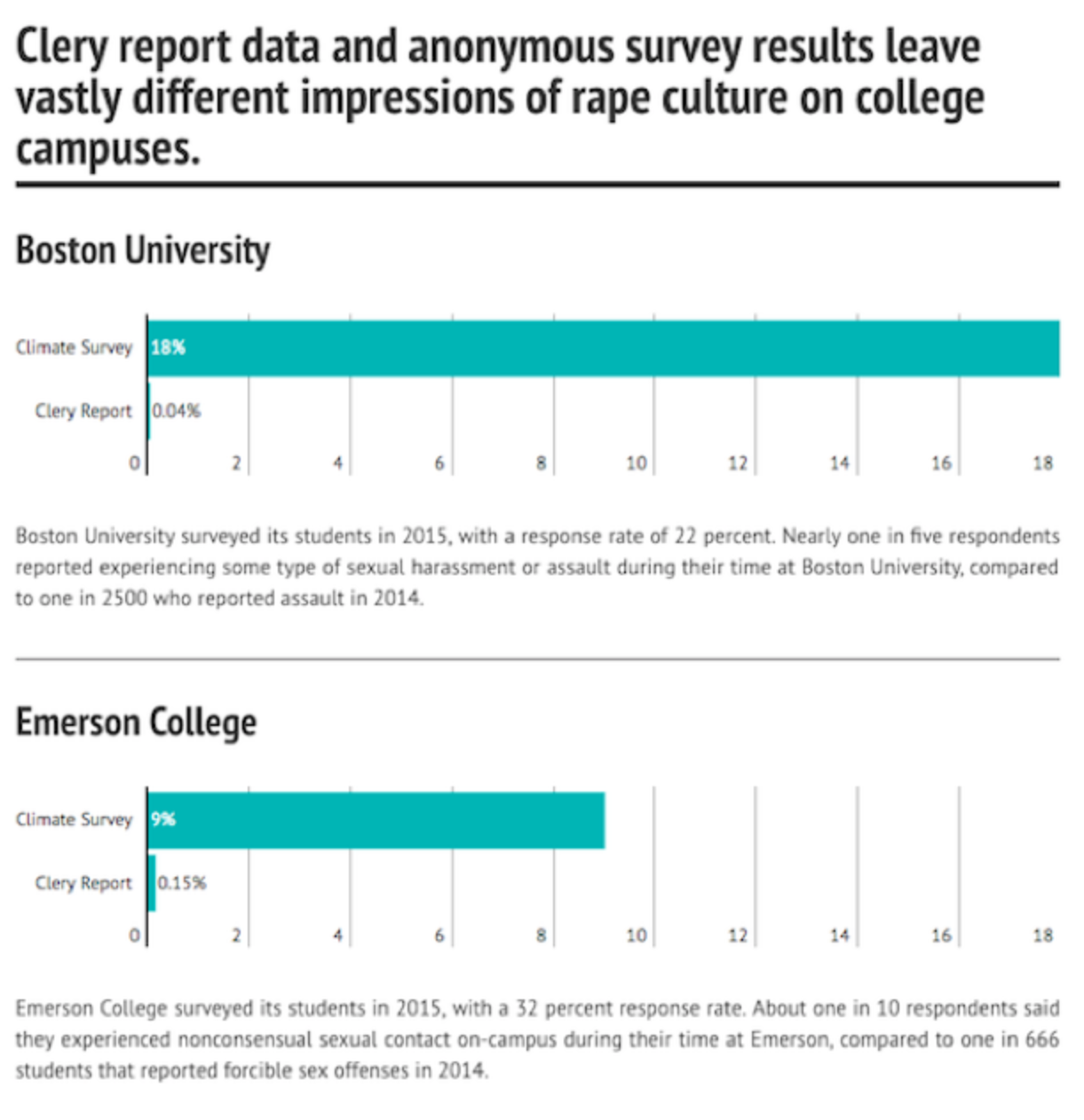

One thing that really stood out to me was a discussion of sexual assault statistics on college campuses.

The Jeanne Clery Act, which requires universities to report sexual assault statistics publicly, was passed after Jeanne Clery, a college student, was brutally raped and murdered in her dorm room in 1986.

Williams College, a small liberal arts college, appeared to have higher rates of sexual assault than Boston University, an urban research university.

The fact is that universities self-report this information, and Williams College appears to have a higher rate of sexual assault because it takes sexual assault seriously and fosters an environment where survivors feel comfortable reporting cases.
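Here’s a minimal sketch of why self-reported numbers can mislead, assuming reported incidents are simply the true incidents multiplied by the share that survivors feel safe reporting. The two campuses and all of their numbers are hypothetical, not the real Williams College or Boston University figures.

```python
from dataclasses import dataclass


@dataclass
class Campus:
    name: str
    true_rate: float        # actual incidents per 1,000 students (unknowable in practice)
    reporting_share: float  # hypothetical fraction of incidents that get reported

    def reported_rate(self) -> float:
        # Self-reported statistics only capture the incidents that are reported.
        return self.true_rate * self.reporting_share


# Hypothetical campuses: made-up numbers for illustration only.
supportive = Campus("supportive small college", true_rate=20.0, reporting_share=0.5)
discouraging = Campus("large university", true_rate=30.0, reporting_share=0.1)

if __name__ == "__main__":
    for c in (supportive, discouraging):
        print(f"{c.name}: true {c.true_rate:.1f}, reported {c.reported_rate():.1f} per 1,000")
```

In this made-up example, the supportive college reports more than three times as many incidents per 1,000 students even though its true rate is lower.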

As my boss likes to say, “think logically” when looking at financial data: you shouldn’t report something as true just because that’s what the data says.

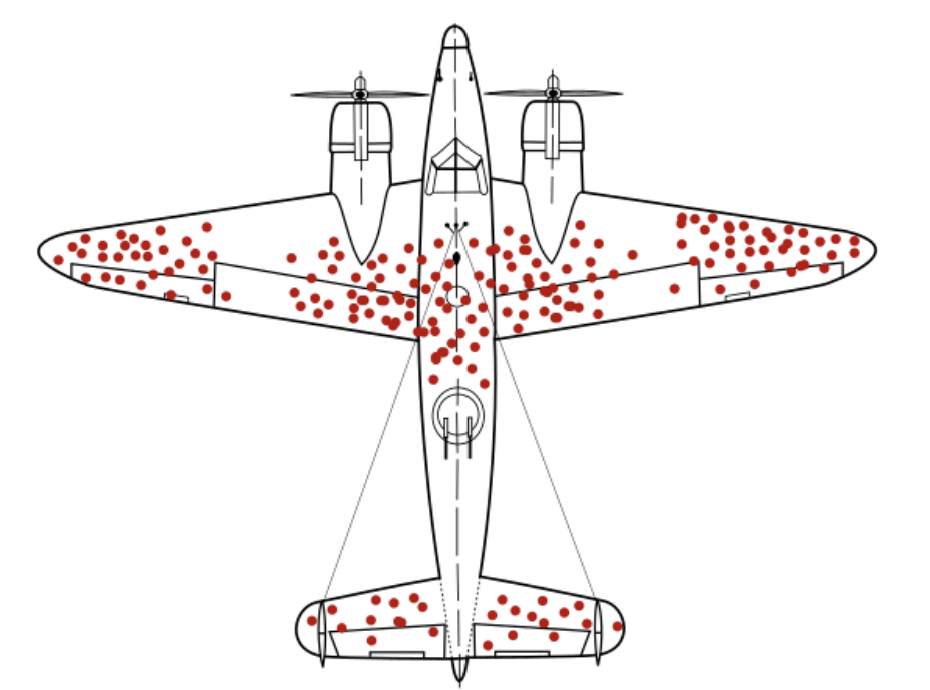

Although it isn’t exactly the same, this reminds me of the story of survivorship bias in World War II bombers. Someone at the time was smart enough to realize that the undamaged sections of the surviving planes were where extra armor was needed, because planes hit in those sections never made it back.

Citizen data scientists

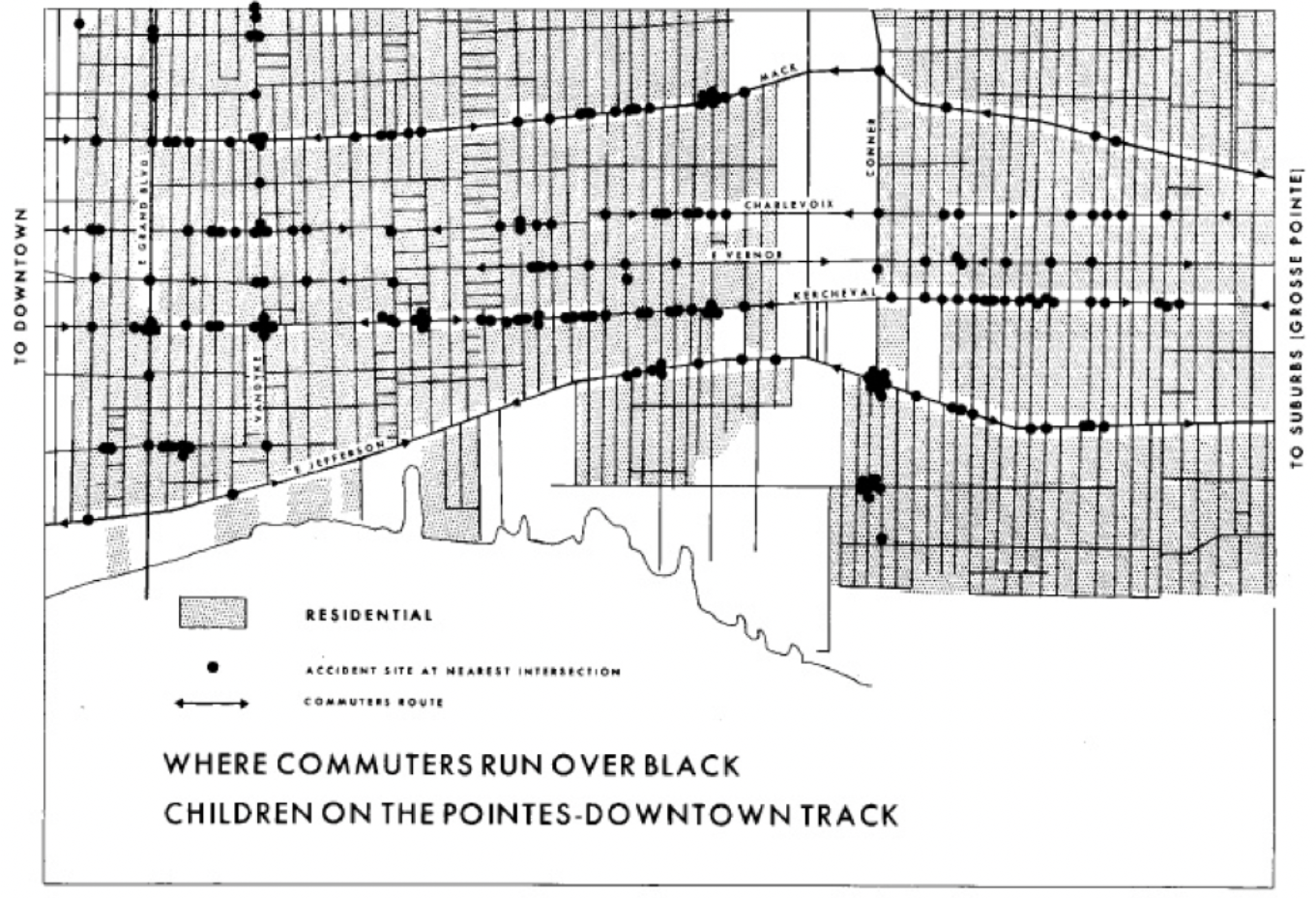

Data Feminism shares a few stories of communities that came together to solve or highlight problems with data. My favorite was the story of Gwendolyn Warren, who in 1970 led efforts in her Detroit community, along with some academic geographers, to create a map of the locations where Black children were run over by commuters in their neighborhood.

Microsoft has the concept of a citizen developer: someone without formal training in computer science or software development who, with Microsoft’s latest GUI-based data tools and just a little training, can develop applications or analyze data.

Though the rise of generative AI is somewhat scary, it is significantly lowering the barrier to doing data work, and I believe we will see a sharp rise in the number of these community-inspired analyses.

Gwendolyn Warren could be considered a citizen data scientist in 1970, and I suspect generative AI will give rise to many more like her.

A final random thought

I live next to Coral Gables, Florida, a suburb of Miami. One thing you notice as you cross into Coral Gables is that all the street signs are gone, replaced by stones on the ground. I have no idea if this is true, but an architect friend of mine once told me that this was done purposefully to disorient outsiders and make it obvious who didn’t belong. The excerpt below reminded me of this anecdote: